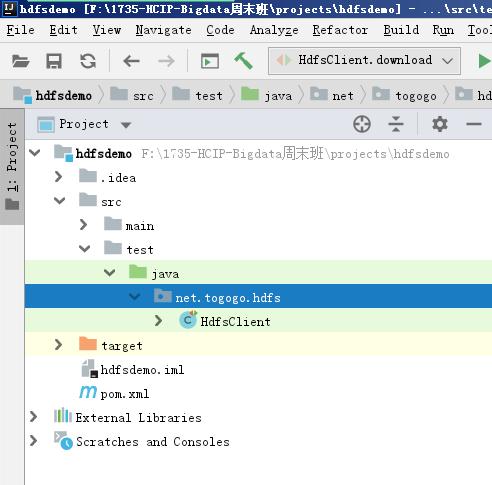

Project directory

Add the Maven dependencies

    <dependencies>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.11</version>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>3.0.0</version>
        </dependency>
    </dependencies>

    package net.togogo.hdfs;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.LocatedFileStatus;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.fs.RemoteIterator;
    import org.junit.Before;
    import org.junit.Test;

    import java.io.IOException;
    import java.net.URI;

    public class HdfsClient {

        private FileSystem fs = null;

        // Runs before each test: opens a connection to HDFS
        @Before
        public void init() {
            System.out.println(" init method start ....");
            try {
                Configuration conf = new Configuration();
                // Address of the cluster to connect to
                URI uri = new URI("hdfs://192.168.20.32:9000");
                // Connect as user "hd"
                fs = FileSystem.get(uri, conf, "hd");
                System.out.println("FileSystem---->" + fs);
            } catch (Exception e) {
                e.printStackTrace();
            }
            System.out.println(" init method end ....");
        }

        @Test
        public void listRoot() {
            try {
                System.out.println("listRoot method ....");
                // Similar to `hadoop fs -ls -R /`: with recursive = true,
                // listFiles walks all subdirectories and returns files only
                RemoteIterator<LocatedFileStatus> it = fs.listFiles(new Path("/"), true);
                // Iterate over the results and print each file name
                while (it.hasNext()) {
                    LocatedFileStatus lf = it.next();
                    System.out.println(lf.getPath().getName());
                }
                fs.close();
            } catch (Exception e) {
                e.printStackTrace();
            }
        }

        @Test
        public void mkdir() {
            try {
                // Create the directory /test0831 (including any missing parents)
                Path path = new Path("/test0831");
                fs.mkdirs(path);
                fs.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

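        @Test
        public void upload() {
            try {
                // Copy the local file F:/hello.log into the HDFS root directory
                Path src = new Path("F:/hello.log");
                Path dst = new Path("/");
                fs.copyFromLocalFile(src, dst);
                // Release the connection, as the other tests do
                fs.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }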

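        @Test
        public void download() {
            try {
                // Copy /hello.log from HDFS to the local file F:/dsthello.log
                Path src = new Path("/hello.log");
                Path dst = new Path("F:/dsthello.log");
                fs.copyToLocalFile(src, dst);
                // Release the connection, as the other tests do
                fs.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

    }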
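
The address passed to `FileSystem.get` in `init()` is an ordinary URI made up of a scheme, a host, and a port. As a rough sketch, it can be sanity-checked with the JDK alone before the tests are run against a cluster. The class `HdfsAddressCheck` and its method `describe` are hypothetical names introduced for illustration and are not part of Hadoop. Insisting on an explicit port is an assumption of this example, not a Hadoop requirement.

```java
import java.net.URI;

// Hypothetical helper (not part of Hadoop): checks an HDFS address using only the JDK.
public class HdfsAddressCheck {

    // Returns "scheme host port" for a well-formed hdfs:// address.
    // Throws IllegalArgumentException if the address is malformed,
    // uses another scheme, or lacks a host or an explicit port.
    public static String describe(String address) {
        URI uri = URI.create(address);
        if (!"hdfs".equals(uri.getScheme())) {
            throw new IllegalArgumentException("Expected an hdfs:// address: " + address);
        }
        if (uri.getHost() == null || uri.getPort() == -1) {
            throw new IllegalArgumentException("Host and port are required: " + address);
        }
        return uri.getScheme() + " " + uri.getHost() + " " + uri.getPort();
    }

    public static void main(String[] args) {
        // Same address as the one used in init()
        System.out.println(describe("hdfs://192.168.20.32:9000"));
    }
}
```

The host and port should match the `fs.defaultFS` value in the cluster's `core-site.xml`; if they differ, `FileSystem.get` will typically fail to reach the NameNode.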
